How to Create AI Agents with LangGraph: A Step-by-Step Guide


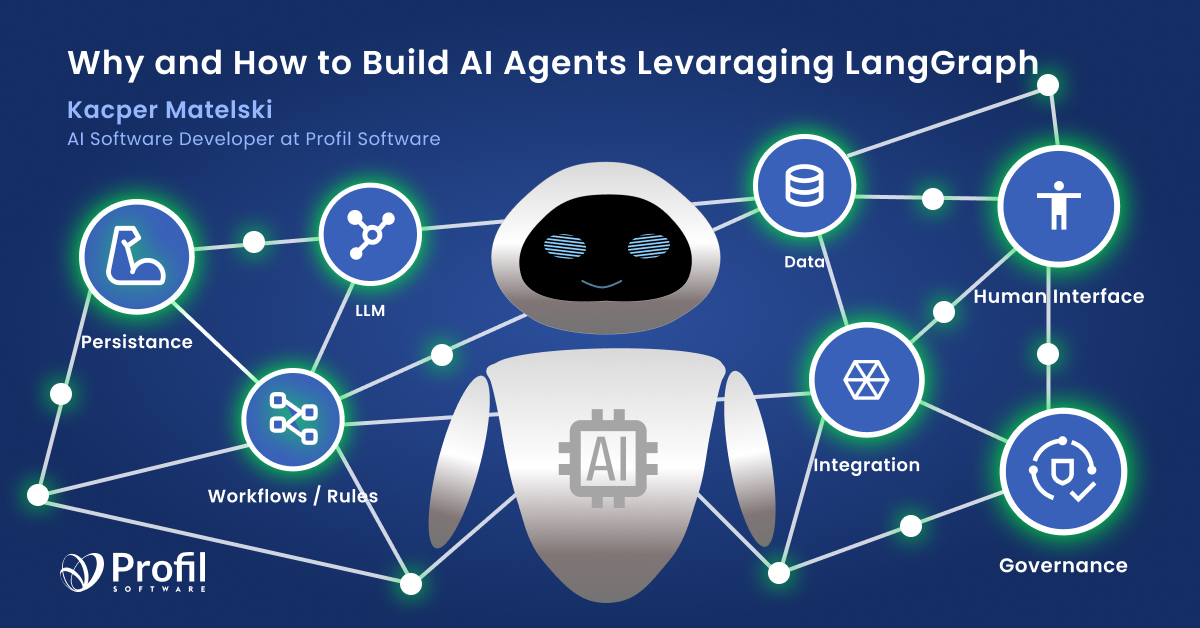

In today’s rapidly evolving technological landscape, AI models that can generate human-like text, make intelligent decisions, and interact with the world through tools have become increasingly common. These capabilities are steadily making their way into our software systems, bringing new efficiency and convenience to everyday life. Across industries, AI agents are changing how work gets done, enabling autonomous systems that can reason, plan, and execute complex tasks with minimal human intervention. As these technologies advance, understanding the fundamental concepts behind AI agents and how to implement them in practice becomes essential for developers and organizations that want to put them to work.

Understanding AI Agents: Definition and Importance

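AI agents are systems that use an LLM to decide the control flow of an application. Instead of simply returning the model’s text to the user, an agent lets the model choose what happens next (which step to run, which tool to call, or when to stop), giving it a degree of autonomy and goal-directed behavior that a standalone language model lacks.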

The concept of agency in AI exists on a spectrum, much like the levels of autonomy in self-driving vehicles. Rather than debating whether a system qualifies as a “true” agent, it’s more productive to consider different degrees to which systems can be agentic. A system becomes more agentic the more an LLM determines how the system behaves — from simple routers that direct inputs to appropriate workflows, to sophisticated state machines capable of complex decision-making sequences.
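To make the “router” end of that spectrum concrete, here is a minimal, dependency-free sketch. `fake_llm_classify` is a hypothetical stand-in for a real model call (a keyword rule keeps it deterministic), and the workflow names are illustrative. The model chooses *which* fixed workflow runs, but not what happens inside it.

```python
from typing import Callable, Dict

# Hypothetical stand-in for an LLM call: a real router would prompt a model
# and parse its answer; a keyword rule keeps this sketch deterministic.
def fake_llm_classify(query: str) -> str:
    q = query.lower()
    if "refund" in q or "invoice" in q:
        return "billing"
    if "error" in q or "crash" in q:
        return "tech_support"
    return "general"

# Fixed workflows; the model only decides WHICH one runs.
WORKFLOWS: Dict[str, Callable[[str], str]] = {
    "billing": lambda q: f"[billing] handling: {q}",
    "tech_support": lambda q: f"[tech_support] handling: {q}",
    "general": lambda q: f"[general] handling: {q}",
}

def route(query: str) -> str:
    choice = fake_llm_classify(query)
    # Fall back safely if the model names a workflow that doesn't exist.
    workflow = WORKFLOWS.get(choice, WORKFLOWS["general"])
    return workflow(query)

print(route("The app shows an error on startup"))
```

Moving further along the spectrum means handing the model more of these decisions, such as whether to loop, which tools to call, and when to stop.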

By themselves, language models can’t take actions; they only output text. Agents overcome this limitation by using the LLM as a reasoning engine that decides which actions to take, and then executing those actions through specialized tools. The results can be fed back into the LLM, which determines whether more actions are needed or the task is complete. This feedback loop is what lets agents handle increasingly complex, multi-step tasks.

The Mechanics Behind AI Agents

At their core, AI agents rely on large language models, such as the transformer-based GPT or Claude model families, to process information and make decisions. These models serve as the “brain” of the agent, providing the reasoning capabilities needed to understand queries, determine appropriate actions, and generate coherent responses.

Tool calling, also known as function calling, is the interface that lets an agent perform tasks the LLM cannot handle from its training data alone, such as retrieving up-to-date information. Instead of answering in plain text, the model emits a structured request naming a tool and its arguments; the application runs that tool and returns the result to the model. This mechanism lets agents interact with external systems, databases, APIs, and other computational resources, extending their capabilities far beyond the knowledge contained in their training data.
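A minimal sketch of the tool-calling round trip, assuming a generic JSON shape for the model’s request (each provider defines its own exact format). `get_weather`, `TOOL_SPECS`, and `execute_tool_call` are hypothetical names, and the model’s output is hard-coded rather than generated.

```python
import json
from typing import Any, Callable, Dict, List

# Hypothetical tool; a real one might query a live weather API.
def get_weather(city: str) -> str:
    return f"Stub forecast for {city}: sunny"

# JSON-Schema-style description sent to the model alongside the prompt.
# The exact wire format differs between providers; this shape is an assumption.
TOOL_SPECS: List[Dict[str, Any]] = [{
    "name": "get_weather",
    "description": "Get the current weather for a city.",
    "parameters": {
        "type": "object",
        "properties": {"city": {"type": "string"}},
        "required": ["city"],
    },
}]

# Maps tool names the model may emit to the Python functions behind them.
TOOLS: Dict[str, Callable[..., Any]] = {"get_weather": get_weather}

def execute_tool_call(raw: str) -> Any:
    """Parse a model-emitted tool call and run the matching function."""
    call = json.loads(raw)
    if call["name"] not in TOOLS:
        raise ValueError(f"Unknown tool: {call['name']}")
    return TOOLS[call["name"]](**call["arguments"])

# Hard-coded example of what a model might emit when it decides to use a tool.
model_output = '{"name": "get_weather", "arguments": {"city": "Paris"}}'
print(execute_tool_call(model_output))  # Stub forecast for Paris: sunny
```

The model never runs code itself; it only names a tool and supplies arguments, and the application decides whether and how to execute the request.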

The control flow of an agent typically follows a sequence of steps. First, the agent receives a query or instruction from a user. Next, it uses its language model to reason about what actions would be appropriate to fulfill the request. Then, it selects and calls the relevant tools with specific parameters. Finally, it processes the results of those tool calls to either formulate a response or determine additional actions to take.
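These steps, together with the feedback loop described earlier, can be sketched as a small loop. `fake_llm` is a hypothetical scripted model (it requests a search once, then answers), and `search` is a stub tool; a real agent would call an LLM API in step 2. The `max_steps` cap is a design choice that keeps a confused model from looping forever.

```python
from typing import Dict, List

Message = Dict[str, str]

# Hypothetical stub tool.
def search(query: str) -> str:
    return f"Stub result for '{query}': LangGraph is a library for building agents."

TOOLS = {"search": search}

# Hypothetical scripted LLM: asks for a tool on the first turn,
# then answers once a tool result is in the history.
def fake_llm(messages: List[Message]) -> Message:
    last = messages[-1]
    if last["role"] == "user":
        return {"role": "assistant", "tool": "search", "args": last["content"]}
    if last["role"] == "tool":
        return {"role": "assistant", "content": f"Based on my search: {last['content']}"}
    return {"role": "assistant", "content": "I'm not sure."}

def run_agent(user_query: str, max_steps: int = 5) -> str:
    messages: List[Message] = [{"role": "user", "content": user_query}]  # 1. receive query
    for _ in range(max_steps):
        reply = fake_llm(messages)                     # 2. reason about next action
        messages.append(reply)
        if "tool" not in reply:                        # no tool requested -> final answer
            return reply["content"]
        result = TOOLS[reply["tool"]](reply["args"])   # 3. call the selected tool
        messages.append({"role": "tool", "content": result})  # 4. feed result back
    return "Stopped: step limit reached."

print(run_agent("What is LangGraph?"))
```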

Memory represents another crucial component of sophisticated agents. By maintaining a record of previous interactions and decisions, agents can build context over time, enabling more coherent multi-turn conversations and increasingly appropriate responses based on accumulated knowledge.
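One simple way to give an agent memory is a rolling window of recent messages that is sent to the model with each new request. The sketch below assumes that policy; the `ConversationMemory` class is hypothetical, and production systems often combine a window like this with summaries or persistent storage.

```python
from collections import deque
from typing import Deque, Dict, List

class ConversationMemory:
    """Keeps the most recent messages so they can accompany the next prompt.

    A fixed-size window is one simple (assumed) policy: once full,
    the oldest message is dropped automatically.
    """

    def __init__(self, max_messages: int = 6) -> None:
        self._messages: Deque[Dict[str, str]] = deque(maxlen=max_messages)

    def add(self, role: str, content: str) -> None:
        self._messages.append({"role": role, "content": content})

    def as_context(self) -> List[Dict[str, str]]:
        return list(self._messages)

memory = ConversationMemory(max_messages=4)
memory.add("user", "My name is Ada.")
memory.add("assistant", "Nice to meet you, Ada.")
memory.add("user", "What's my name?")
# The model would receive all stored turns, so it has what it needs to answer.
print([m["content"] for m in memory.as_context()])
```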

Types of Agent Architectures

Various architectural approaches have emerged for building effective AI agents, each with distinct characteristics suited to different use cases:

ReAct (Reasoning + Acting) represents one of the most widely adopted frameworks for agent development. This approach interleaves reasoning steps with action execution, allowing the agent to think about what to do, take actions, observe the results, and then continue reasoning based on those observations. This creates a dynamic problem-solving process that mimics human cognitive patterns.
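A minimal ReAct-style loop, assuming the common Thought / Action / Observation text format. `fake_llm` is a hypothetical scripted model and `multiply` is a toy tool; the loop parses each `Action: tool[input]` line, runs the tool, appends the observation, and lets the model reason again until it emits a final answer.

```python
import re
from typing import Callable, Dict

# Toy tool: input is "a,b".
def multiply(arg: str) -> str:
    a, b = (int(x) for x in arg.split(","))
    return str(a * b)

TOOLS: Dict[str, Callable[[str], str]] = {"multiply": multiply}
ACTION_RE = re.compile(r"Action: (\w+)\[(.*)\]")

# Hypothetical scripted LLM: acts first, then answers from the last observation.
def fake_llm(transcript: str) -> str:
    if "Observation:" not in transcript:
        return "Thought: I should use the multiply tool.\nAction: multiply[17,23]"
    observation = transcript.rsplit("Observation: ", 1)[1].strip()
    return f"Thought: I now know the answer.\nFinal Answer: {observation}"

def react(question: str, max_turns: int = 5) -> str:
    transcript = f"Question: {question}\n"
    for _ in range(max_turns):
        step = fake_llm(transcript)            # reason (Thought) and choose (Action)
        transcript += step + "\n"
        if "Final Answer:" in step:
            return step.split("Final Answer:", 1)[1].strip()
        match = ACTION_RE.search(step)
        if match is None:
            return "Could not parse an action."
        tool, arg = match.groups()
        observation = TOOLS[tool](arg)         # act
        transcript += f"Observation: {observation}\n"  # observe, then reason again
    return "No answer within the turn limit."

print(react("What is 17 times 23?"))  # 391
```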

Plan-and-execute agents operate by first developing a comprehensive plan for tackling a problem, then methodically executing each step of that plan. This approach excels at handling complex, multi-stage tasks that benefit from strategic planning before action.
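A minimal plan-and-execute sketch. `fake_plan` is a hypothetical stand-in for an LLM that returns the whole step list up front, and the executors are stubs; each step can read the results of earlier steps.

```python
from typing import Callable, Dict, List, Tuple

Step = Tuple[str, str]  # (step type, input)

# Hypothetical planner: a real one would ask the LLM for a step list.
def fake_plan(goal: str) -> List[Step]:
    return [
        ("search", "LangGraph documentation"),
        ("summarize", "search results"),
        ("write", goal),
    ]

# Hypothetical executors; each receives its input plus all earlier results.
EXECUTORS: Dict[str, Callable[[str, List[str]], str]] = {
    "search": lambda arg, done: f"found docs about {arg}",
    "summarize": lambda arg, done: f"summary of ({done[-1]})",
    "write": lambda arg, done: f"report on '{arg}' using [{done[-1]}]",
}

def plan_and_execute(goal: str) -> List[str]:
    plan = fake_plan(goal)                  # 1. plan everything first
    results: List[str] = []
    for step_type, arg in plan:             # 2. then execute step by step
        results.append(EXECUTORS[step_type](arg, results))
    return results

for line in plan_and_execute("agents overview"):
    print(line)
```

Many implementations also let the planner revise the remaining steps after each execution; this sketch omits re-planning for brevity.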

Multi-agent systems involve multiple specialized agents collaborating to achieve common goals. By distributing tasks among agents with different capabilities or knowledge domains, these systems can handle more complex problems than single agents alone.
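A minimal supervisor-style sketch, which is one common way to coordinate specialists (an assumption; other topologies exist). `researcher`, `writer`, and `supervisor` are hypothetical stubs for what would each be LLM-backed agents; the supervisor decides who acts next and when the team is done.

```python
from typing import Callable, Dict, List

# Hypothetical specialist agents; each would normally wrap its own LLM and tools.
def researcher(task: str, notes: List[str]) -> str:
    return f"facts about {task}"

def writer(task: str, notes: List[str]) -> str:
    return f"article on {task} citing: {'; '.join(notes)}"

AGENTS: Dict[str, Callable[[str, List[str]], str]] = {
    "researcher": researcher,
    "writer": writer,
}

# Hypothetical supervisor policy (an LLM call in practice): research, then write.
def supervisor(notes: List[str]) -> str:
    if not notes:
        return "researcher"
    if len(notes) == 1:
        return "writer"
    return "FINISH"

def run_team(task: str, max_rounds: int = 5) -> List[str]:
    notes: List[str] = []                 # shared workspace all agents can read
    for _ in range(max_rounds):
        next_agent = supervisor(notes)
        if next_agent == "FINISH":
            break
        notes.append(AGENTS[next_agent](task, notes))
    return notes

print(run_team("AI agents")[-1])
```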

Self-ask agents incorporate a self-questioning mechanism where the agent actively identifies gaps in its knowledge and formulates questions to fill those gaps before proceeding. This approach enhances the agent’s problem-solving capabilities through metacognitive processes.
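A minimal self-ask sketch. `fake_follow_ups` is a hypothetical stand-in for the model deciding which follow-up questions it needs, and `KNOWLEDGE` is a toy lookup table playing the role of a search tool (Project X and Team Alpha are made-up names). The output uses the Follow up / Intermediate answer format associated with the self-ask technique.

```python
from typing import Dict, List, Tuple

# Toy knowledge base standing in for a search tool (made-up entities).
KNOWLEDGE: Dict[str, str] = {
    "Who maintains Project X?": "Team Alpha",
    "Where is Team Alpha based?": "Berlin",
}

# Hypothetical model behaviour: spot the missing fact, ask for it,
# and stop asking once the gaps are filled.
def fake_follow_ups(question: str, answers: List[Tuple[str, str]]) -> List[str]:
    if not answers:
        return ["Who maintains Project X?"]
    if len(answers) == 1:
        return [f"Where is {answers[0][1]} based?"]
    return []

def self_ask(question: str, max_hops: int = 4) -> str:
    answers: List[Tuple[str, str]] = []
    for _ in range(max_hops):
        follow_ups = fake_follow_ups(question, answers)
        if not follow_ups:
            break
        for q in follow_ups:
            answers.append((q, KNOWLEDGE.get(q, "unknown")))  # intermediate answer
    lines = [f"Question: {question}"]
    for q, a in answers:
        lines += [f"Follow up: {q}", f"Intermediate answer: {a}"]
    final = answers[-1][1] if answers else "unknown"
    lines.append(f"So the final answer is: {final}")
    return "\n".join(lines)

print(self_ask("Where is the team that maintains Project X based?"))
```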

Critique-revise agents implement feedback loops where one component generates solutions while another evaluates and critiques those solutions, leading to iterative improvements. This architecture is particularly effective for creative tasks and quality-sensitive applications.
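A minimal critique-revise loop. `generate`, `critique`, and `revise` are hypothetical stand-ins for three LLM calls (or one model in three roles); here the critic applies two checkable rules so the example stays deterministic. The loop stops when the critic finds nothing to fix or after `max_rounds`.

```python
from typing import List

# Hypothetical generator (an LLM call in practice).
def generate(topic: str) -> str:
    return f"{topic} are useful"

# Hypothetical critic with simple, checkable rules.
def critique(draft: str) -> List[str]:
    issues: List[str] = []
    if not draft.endswith("."):
        issues.append("missing final period")
    if not draft[0].isupper():
        issues.append("should start with a capital letter")
    return issues

# Hypothetical reviser that addresses each reported issue.
def revise(draft: str, issues: List[str]) -> str:
    if "should start with a capital letter" in issues:
        draft = draft[0].upper() + draft[1:]
    if "missing final period" in issues:
        draft += "."
    return draft

def critique_revise(topic: str, max_rounds: int = 3) -> str:
    draft = generate(topic)
    for _ in range(max_rounds):
        issues = critique(draft)
        if not issues:          # critic is satisfied
            break
        draft = revise(draft, issues)
    return draft

print(critique_revise("agents"))  # Agents are useful.
```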

Real-World Applications and Success Stories

AI agents have demonstrated remarkable versatility across industries, solving complex problems and enhancing productivity in numerous contexts:

LinkedIn has developed an AI recruiter that streamlines hiring processes through conversational search and candidate matching. Their hierarchical agent system, powered by LangGraph, has transformed recruitment workflows by automating routine tasks while maintaining high-quality candidate selection.

At Uber, the Developer Platform team leveraged LangGraph to build a network of agents that automate unit test generation for large-scale code migrations. This system significantly accelerates development cycles while maintaining code quality standards.

Klarna’s AI Assistant handles customer support tasks for 85 million active users, reducing customer resolution time by 80%. Built using LangGraph and LangSmith, this agent-based system demonstrates how AI can transform customer service operations at scale.

Replit has created an AI agent that helps developers generate code and ship applications rapidly. Their multi-agent system exposes agent actions to users and supports human-in-the-loop processes, striking a balance between automation and human oversight.

Building Your First AI Agent with LangGraph

In this simple example, we’ll write a Python script that demonstrates the key features of an AI agent using LangGraph, the agent-orchestration library from the LangChain ecosystem.

Let’s start by defining the state and tools for our LLM.
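To show what “state” and “tools” mean concretely, here is a dependency-free sketch rather than LangGraph code. It mirrors the LangGraph convention of a `TypedDict` state whose `messages` key is annotated with a reducer (LangGraph’s own is `add_messages`); `append_messages`, `search_web`, and `apply_update` are hypothetical names, and the search tool returns stub text.

```python
from typing import Annotated, Any, Dict, List, TypedDict, get_type_hints

Message = Dict[str, Any]

# Reducer: decides how a node's update merges into existing state.
# LangGraph provides its own (add_messages); this hypothetical one just appends.
def append_messages(existing: List[Message], new: List[Message]) -> List[Message]:
    return existing + new

class AgentState(TypedDict):
    # Annotated attaches the reducer to the "messages" key.
    messages: Annotated[List[Message], append_messages]

# Hypothetical search tool; a real agent would call a search API here.
def search_web(query: str) -> str:
    """Search the web for the given query."""
    return f"Stub search results for: {query}"

# Registry used to look up tools by the name the model emits.
TOOLS = {"search_web": search_web}

def apply_update(state: AgentState, update: Dict[str, Any]) -> AgentState:
    """Merge a node's partial update into state, using each key's reducer."""
    hints = get_type_hints(AgentState, include_extras=True)
    merged: Dict[str, Any] = dict(state)
    for key, value in update.items():
        reducer = hints[key].__metadata__[0]
        merged[key] = reducer(state[key], value)
    return AgentState(**merged)

state = AgentState(messages=[{"role": "user", "content": "What is LangGraph?"}])
state = apply_update(state, {"messages": [{"role": "assistant", "content": "Let me search."}]})
print(len(state["messages"]))  # 2
```

Declaring the reducer on the state type, rather than inside each node, means nodes only return what changed and the merge rule lives in one place.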

And now we can build the graph of our agent.
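The graph itself can be sketched without the library. `MiniGraph` is a hypothetical class whose method names echo LangGraph’s `StateGraph` (`add_node`, `add_edge`, `add_conditional_edges`, `set_entry_point`), but it is not LangGraph. `call_model` is a scripted stand-in for the LLM node, and `should_continue` routes to the tool node whenever the model requests a tool, otherwise to `END`.

```python
from typing import Any, Callable, Dict

END = "__end__"
State = Dict[str, Any]
Node = Callable[[State], State]

class MiniGraph:
    """Tiny stand-in for a state graph: nodes return updates, edges pick the next node.

    Method names echo LangGraph's StateGraph, but this is NOT LangGraph.
    """

    def __init__(self) -> None:
        self.nodes: Dict[str, Node] = {}
        self.edges: Dict[str, Callable[[State], str]] = {}
        self.entry = ""

    def add_node(self, name: str, fn: Node) -> None:
        self.nodes[name] = fn

    def add_edge(self, src: str, dst: str) -> None:
        self.edges[src] = lambda state: dst          # unconditional edge

    def add_conditional_edges(self, src: str, router: Callable[[State], str]) -> None:
        self.edges[src] = router                     # router returns next node name

    def set_entry_point(self, name: str) -> None:
        self.entry = name

    def invoke(self, state: State, max_steps: int = 10) -> State:
        current = self.entry
        for _ in range(max_steps):
            update = self.nodes[current](state)
            # Append new messages instead of overwriting them.
            state = {**state, "messages": state["messages"] + update["messages"]}
            current = self.edges[current](state)
            if current == END:
                return state
        raise RuntimeError("step limit reached")

# Hypothetical tool and model stubs.
def search_web(query: str) -> str:
    return f"Stub search results for: {query}"

def call_model(state: State) -> State:
    last = state["messages"][-1]
    if last["role"] == "user":  # request a tool the first time
        return {"messages": [{"role": "assistant",
                              "tool_call": {"name": "search_web", "args": last["content"]}}]}
    return {"messages": [{"role": "assistant", "content": f"Answer based on: {last['content']}"}]}

def call_tools(state: State) -> State:
    call = state["messages"][-1]["tool_call"]
    result = {"search_web": search_web}[call["name"]](call["args"])
    return {"messages": [{"role": "tool", "content": result}]}

def should_continue(state: State) -> str:
    return "tools" if "tool_call" in state["messages"][-1] else END

graph = MiniGraph()
graph.add_node("agent", call_model)
graph.add_node("tools", call_tools)
graph.set_entry_point("agent")
graph.add_conditional_edges("agent", should_continue)
graph.add_edge("tools", "agent")

final = graph.invoke({"messages": [{"role": "user", "content": "What is LangGraph?"}]})
print(final["messages"][-1]["content"])
```

The cycle between `agent` and `tools` is what makes this an agent rather than a fixed pipeline: the model’s output decides whether another round happens.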

Next, we add memory so the agent can remember the context of the conversation.
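In LangGraph, memory is typically added by compiling the graph with a checkpointer (for example `MemorySaver`) and passing a `thread_id` under `configurable` in the invocation config. The sketch below imitates that idea without the library: `InMemoryCheckpointer`, `call_model`, and `chat` are hypothetical, and the model is a stub that only “remembers” a name if it appears in the saved history for that thread.

```python
from typing import Dict, List

Message = Dict[str, str]

class InMemoryCheckpointer:
    """Hypothetical store: saves each thread's message history between calls."""

    def __init__(self) -> None:
        self._threads: Dict[str, List[Message]] = {}

    def load(self, thread_id: str) -> List[Message]:
        return list(self._threads.get(thread_id, []))

    def save(self, thread_id: str, messages: List[Message]) -> None:
        self._threads[thread_id] = list(messages)

# Hypothetical model stub: can only answer from what is in the history.
def call_model(messages: List[Message]) -> Message:
    if messages[-1]["content"].startswith("My name is "):
        return {"role": "assistant", "content": "Nice to meet you!"}
    for m in messages:
        if m["role"] == "user" and m["content"].startswith("My name is "):
            name = m["content"][len("My name is "):].rstrip(".")
            return {"role": "assistant", "content": f"Your name is {name}."}
    return {"role": "assistant", "content": "I don't know your name yet."}

def chat(store: InMemoryCheckpointer, thread_id: str, text: str) -> str:
    messages = store.load(thread_id)          # restore this thread's context
    messages.append({"role": "user", "content": text})
    reply = call_model(messages)
    messages.append(reply)
    store.save(thread_id, messages)           # persist for the next turn
    return reply["content"]

store = InMemoryCheckpointer()
chat(store, "thread-1", "My name is Ada.")
print(chat(store, "thread-1", "What's my name?"))  # Your name is Ada.
print(chat(store, "thread-2", "What's my name?"))  # I don't know your name yet.
```

Keying history by thread ID keeps separate conversations isolated while letting each one accumulate context across calls.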

This example demonstrates how to create a simple but powerful AI agent that can search for information and maintain conversation state across multiple interactions.

Challenges and Best Practices in Agent Development

While AI agents offer tremendous potential, their development comes with significant challenges. Ensuring that agents behave reliably, ethically, and transparently requires careful design and extensive testing. Agents might sometimes take unexpected actions, misinterpret user intentions, or struggle with complex reasoning tasks.

To address these challenges, several best practices have emerged:

Human-in-the-loop mechanisms provide critical oversight, allowing humans to review and approve agent actions before execution. This approach is especially important for high-stakes applications where errors could have significant consequences.
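A minimal approval gate, assuming tools with side effects are listed in a hypothetical `NEEDS_APPROVAL` set. The `approve` callback is where a person decides; `console_approver` shows one interactive option, and tests can pass a lambda instead. LangGraph has its own pause-and-resume support for this pattern; this sketch does not use it.

```python
from typing import Callable, Dict

Approver = Callable[[str, Dict[str, str]], bool]

# Hypothetical tools; only the one with side effects needs sign-off.
def read_balance(args: Dict[str, str]) -> str:
    return f"Balance for {args['account']}: (stub)"

def send_payment(args: Dict[str, str]) -> str:
    return f"Sent {args['amount']} to {args['to']} (stub)"

TOOLS: Dict[str, Callable[[Dict[str, str]], str]] = {
    "read_balance": read_balance,
    "send_payment": send_payment,
}
NEEDS_APPROVAL = {"send_payment"}

def execute_with_oversight(name: str, args: Dict[str, str], approve: Approver) -> str:
    """Run a tool, pausing for a human decision on high-stakes actions."""
    if name in NEEDS_APPROVAL and not approve(name, args):
        return f"Action '{name}' rejected by reviewer."
    return TOOLS[name](args)

def console_approver(name: str, args: Dict[str, str]) -> bool:
    # Interactive option: a person confirms at the terminal.
    return input(f"Allow {name} with {args}? [y/N] ").strip().lower() == "y"

# Non-interactive usage: an automatic reviewer that rejects everything.
print(execute_with_oversight("send_payment", {"amount": "10", "to": "Bob"},
                             approve=lambda n, a: False))
```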

Structured tool design helps constrain agent behavior within appropriate boundaries while providing clear interfaces for interacting with external systems. Well-designed tools can prevent many common failure modes while enhancing agent capabilities.
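One way to structure a tool is to validate its arguments against a typed schema before running it. Below, a hypothetical `SearchArgs` dataclass rejects empty queries, unknown keys, and out-of-range limits (the 1 to 20 bound is an arbitrary example), and the tool returns the error as text so the model can correct its call on the next turn.

```python
from dataclasses import dataclass
from typing import Any, Dict

@dataclass(frozen=True)
class SearchArgs:
    """Hypothetical typed arguments for a search tool."""
    query: str
    max_results: int = 5

    def __post_init__(self) -> None:
        if not isinstance(self.query, str) or not self.query.strip():
            raise ValueError("query must be a non-empty string")
        if not isinstance(self.max_results, int) or not 1 <= self.max_results <= 20:
            raise ValueError("max_results must be an integer from 1 to 20")

def search_tool(raw_args: Dict[str, Any]) -> str:
    try:
        args = SearchArgs(**raw_args)   # unknown or missing keys raise TypeError
    except (TypeError, ValueError) as err:
        # Report the problem instead of crashing, so the model can retry.
        return f"Invalid arguments: {err}"
    return f"Top {args.max_results} stub results for '{args.query}'"

print(search_tool({"query": "LangGraph"}))
print(search_tool({"query": "LangGraph", "max_results": 100}))
```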

Comprehensive testing and evaluation frameworks help identify and address issues before deployment. Tools like LangSmith provide end-to-end trace observability, tool selection visibility, and detailed performance metrics that accelerate debugging and optimization.
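LangSmith records traces for you. As a library-free illustration of the same idea, the sketch below wraps tools in a hypothetical `traced` decorator that logs each call, then writes a test asserting which tool a toy agent selected. `toy_agent` and its routing rule are made up for the example.

```python
import functools
import time
from typing import Any, Callable, Dict, List

TRACE: List[Dict[str, Any]] = []

def traced(fn: Callable[..., Any]) -> Callable[..., Any]:
    """Record each tool call's name, inputs, output and latency."""
    @functools.wraps(fn)
    def wrapper(*args: Any, **kwargs: Any) -> Any:
        start = time.perf_counter()
        result = fn(*args, **kwargs)
        TRACE.append({
            "tool": fn.__name__,
            "args": args,
            "kwargs": kwargs,
            "output": result,
            "seconds": time.perf_counter() - start,
        })
        return result
    return wrapper

@traced
def search(query: str) -> str:
    return f"stub results for {query}"

@traced
def calculator(a: float, b: float) -> float:
    return a + b

# Hypothetical agent policy under test: addition questions should use the calculator.
def toy_agent(question: str) -> Any:
    if "+" in question:
        a, b = (float(x) for x in question.split("+"))
        return calculator(a, b)
    return search(question)

def test_tool_selection() -> None:
    TRACE.clear()
    assert toy_agent("2 + 3") == 5.0
    assert [step["tool"] for step in TRACE] == ["calculator"]
    TRACE.clear()
    toy_agent("latest LangGraph release")
    assert [step["tool"] for step in TRACE] == ["search"]

test_tool_selection()
```

Asserting on the trace, not just the final answer, catches agents that reach the right output through the wrong tool.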

The Future of AI Agents

Natural language processing models continue to reshape how we work with software, and AI agents represent one of the most promising frontiers in this evolution. As these technologies mature, we can expect increasingly sophisticated agents that combine multiple capabilities, collaborate effectively with humans and other agents, and tackle more complex tasks across domains.

Adoption of AI agents continues to accelerate. According to one industry survey, 51% of organizations already use agents in production and 78% have active plans to implement them soon. Mid-sized companies (100–2000 employees) have been particularly aggressive, with 63% already running agents in production.

By fostering responsible development and utilization of AI agents, we can harness their full potential to empower individuals and organizations. These technologies catalyze positive change when deployed thoughtfully, with appropriate human oversight and ethical guardrails. The future of AI agents lies not just in their autonomous capabilities, but in their ability to augment human intelligence, creativity, and productivity in ways that create value while respecting human agency.

As we continue to explore and expand the possibilities of AI agents, the collaboration between human intelligence and artificial reasoning engines will unlock new frontiers of innovation and problem-solving capacity, reshaping how we interact with technology and each other in profound ways.